What happens

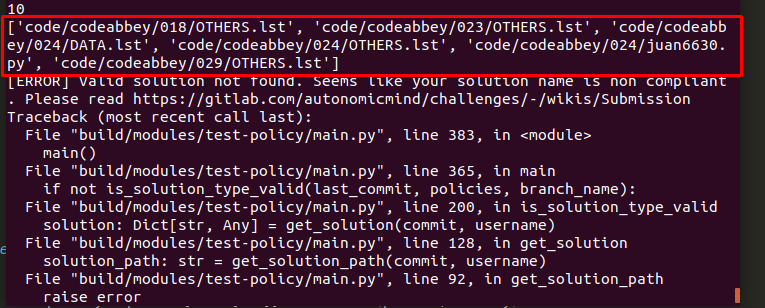

I'm having problems with test_policy again, mainly with challenges of type vbd. I want to understand why it is not counting the challenges even though it still accepts the test.

What do you understand or find about that problem

Previously I had problems with test_policy because of the structure that these challenges must have, and thanks to @pastel-code I was able to understand a little more here.

Now I think my challenges are well structured, but I keep getting the same errors.

Did you make any workaround? What did you do?

I have seen that this problem has happened to many users, and there is a lot of information available for solving it by yourself, but the information is still confusing. For example, @pastel-code says the structure should be

site -> challenge -> feature/evidence

That matches the structure described in https://gitlab.com/autonomicmind/challenges/-/wikis/structure, but an approver also told another user with the same problem as me, in https://gitlab.com/autonomicmind/challenges/-/issues/269, that the structure should be as follows:

use only 1 folder with this structure: <CWE>-<ToE>-<vulnerability>

Coincidentally, most users hit these errors when trying to push hack challenges. I think I have the correct structure, so I suspect the cause is the configuration of test_policy. Reading a bit of the code that manages the test, I see a function that gets the deviation:

    from typing import Any, Dict


    def get_deviation(solutions: Dict[str, int], policy: Any) -> int:
        code_active: bool = policy['code']['active']
        hack_active: bool = policy['hack']['active']
        vbd_active: bool = policy['vbd']['active']
        code_to_hack: int = abs(solutions['code'] - solutions['hack']) \
            if code_active and hack_active else 0
        code_to_vbd: int = abs(solutions['code'] - solutions['vbd']) \
            if code_active and vbd_active else 0
        hack_to_vbd: int = abs(solutions['hack'] - solutions['vbd']) \
            if hack_active and vbd_active else 0
        return max(code_to_hack, code_to_vbd, hack_to_vbd)
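To make the function easier to reason about, here is a minimal sketch that calls it with made-up numbers. The policy dictionary and the solution counts are assumptions for illustration only; the real values come from the repository's policy configuration and from my actual solutions.

```python
from typing import Any, Dict


def get_deviation(solutions: Dict[str, int], policy: Any) -> int:
    # Same logic as the function quoted above: the largest absolute
    # difference between any two active challenge types.
    code_active: bool = policy['code']['active']
    hack_active: bool = policy['hack']['active']
    vbd_active: bool = policy['vbd']['active']
    code_to_hack: int = abs(solutions['code'] - solutions['hack']) \
        if code_active and hack_active else 0
    code_to_vbd: int = abs(solutions['code'] - solutions['vbd']) \
        if code_active and vbd_active else 0
    hack_to_vbd: int = abs(solutions['hack'] - solutions['vbd']) \
        if hack_active and vbd_active else 0
    return max(code_to_hack, code_to_vbd, hack_to_vbd)


# Hypothetical policy: all three challenge types are active.
policy = {
    'code': {'active': True},
    'hack': {'active': True},
    'vbd': {'active': True},
}

# Hypothetical solution counts, not my real totals.
solutions = {'code': 10, 'hack': 8, 'vbd': 5}

deviation = get_deviation(solutions, policy)
print(deviation)  # largest pairwise gap: |10 - 5| = 5
```

With these numbers, pushing one more vbd solution would lower the deviation, while pushing one more code solution would raise it.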

From what I see in that function, it seems that some cases are missing, such as:

vbd_to_code

hack_to_code

vbd_to_hack
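Before treating those as missing, it is worth checking whether they would give different numbers. A quick sketch with hypothetical counts (the values are assumptions, not my real totals) suggests they would not, because abs(a - b) equals abs(b - a):

```python
from itertools import permutations

# Hypothetical solution counts, for illustration only.
solutions = {'code': 10, 'hack': 8, 'vbd': 5}

# Compare every ordered pair of challenge types with its reverse.
for first, second in permutations(solutions, 2):
    forward = abs(solutions[first] - solutions[second])
    backward = abs(solutions[second] - solutions[first])
    print(f'{first}_to_{second} = {forward}, {second}_to_{first} = {backward}')

# True when every pair gives the same value in both directions.
symmetric = all(
    abs(solutions[a] - solutions[b]) == abs(solutions[b] - solutions[a])
    for a, b in permutations(solutions, 2)
)
print(symmetric)
```

If that holds in general, the three pairs already computed in get_deviation cover the reverse cases too, so the cause of my problem is probably somewhere else.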

I also think there may be a bug that appears with certain numbers in the deviation variables of the challenges, because, like the user here, I am currently having the same problem with the same composition of solutions, and also when trying to push a hack challenge (this is my job).

Again, the cause may be my structure, but reading a bit of the code I see functions, such as abs(), that I suspect could behave in unexpected ways.
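As a sanity check on that suspicion, here is a small sketch of how abs() behaves on Python integers. As far as I know, Python's int has arbitrary precision, so abs() should not overflow the way it can in C for the most negative value. The numbers below are arbitrary examples, not values taken from the policy code.

```python
# Arbitrary example values, including some beyond the 64-bit signed range.
values = [0, -1, 7, -(2 ** 63), -(10 ** 30)]

# Map each value to its absolute value.
results = {value: abs(value) for value in values}

for value, result in results.items():
    print(f'abs({value}) = {result}')
```

Since solution counts are small non-negative integers, abs() on their differences should be safe here.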